Settings That Disable Multicast

If you want multicast traffic to work at all on your virtual APs, there are two settings that need to be disabled:

- On the virtual AP profile, the setting "Drop broadcast and multicast" must be disabled. This one is self-explanatory. With this setting enabled, the SSID will not forward any multicast traffic from the wire to wireless clients, or from wireless clients to other wireless clients. UPDATE: after a tip from Paul Finlay with Aruba ERT, I went back and tested this. Multicast will work with the "drop broadcast and multicast" option enabled if clients request multicast via IGMP joins. Enabling this option will block unsolicited multicast but will still allow streams requested by clients.

- On the VLAN associated with a virtual AP, the setting "Broadcast/Multicast Optimization" must be disabled. When enabled, only some basic multicast groups, like VRRP, will be allowed.

If you're like me, you may wonder why there are two options to drop multicast traffic. Why have the broadcast/multicast optimization setting if you can disable all multicast traffic for a virtual AP? The answer is that the VLAN setting applies to more than just virtual APs; it also applies to wired ports, for things like Remote APs. If you want multicast to work for your wireless network, both must be disabled.

Normal Multicast

Without any special configuration, multicast traffic between wireless clients will work as long as the settings mentioned above are disabled. Traffic will be delivered to all clients on the WLAN, even if they aren't listening for it. As defined in the 802.11 standard, multicast and broadcast traffic is buffered at the AP or controller and delivered at the DTIM, which by default is every beacon interval. The AP lets clients know in the beacon that multicast traffic will follow it (wlan.tim.bmapctl.multicast == 1 is a handy Wireshark display filter for finding beacons that indicate multicast traffic is coming).

The AP will then send the multicast frames at the lowest mandatory rate for the virtual AP.
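The Wireshark filter mentioned above checks a single bit in the beacon's TIM element. Here is a minimal Python sketch of that check. It assumes you already have the raw bytes of the TIM element (element ID 5). The function name `tim_multicast_pending` is hypothetical, and the byte layout follows the 802.11 TIM element.

```python
def tim_multicast_pending(tim: bytes) -> bool:
    """Return True if a raw TIM element announces buffered group-addressed frames.

    Expected layout (IEEE 802.11 TIM element):
      [0] Element ID (5)   [1] Length   [2] DTIM Count   [3] DTIM Period
      [4] Bitmap Control   [5:] Partial Virtual Bitmap (at least 1 byte)
    """
    if len(tim) < 6 or tim[0] != 5:
        raise ValueError("not a complete TIM element")
    bitmap_control = tim[4]
    # Bit 0 of Bitmap Control is the traffic indicator for AID 0, i.e.
    # group-addressed (broadcast/multicast) traffic. The AP only sets it in
    # DTIM beacons (DTIM Count == 0). Wireshark exposes this bit as
    # wlan.tim.bmapctl.multicast.
    return bool(bitmap_control & 0x01)


# DTIM beacon (count 0, period 1) with the multicast bit set.
dtim_beacon = bytes([5, 4, 0, 1, 0x01, 0x00])
print(tim_multicast_pending(dtim_beacon))  # True
```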

|

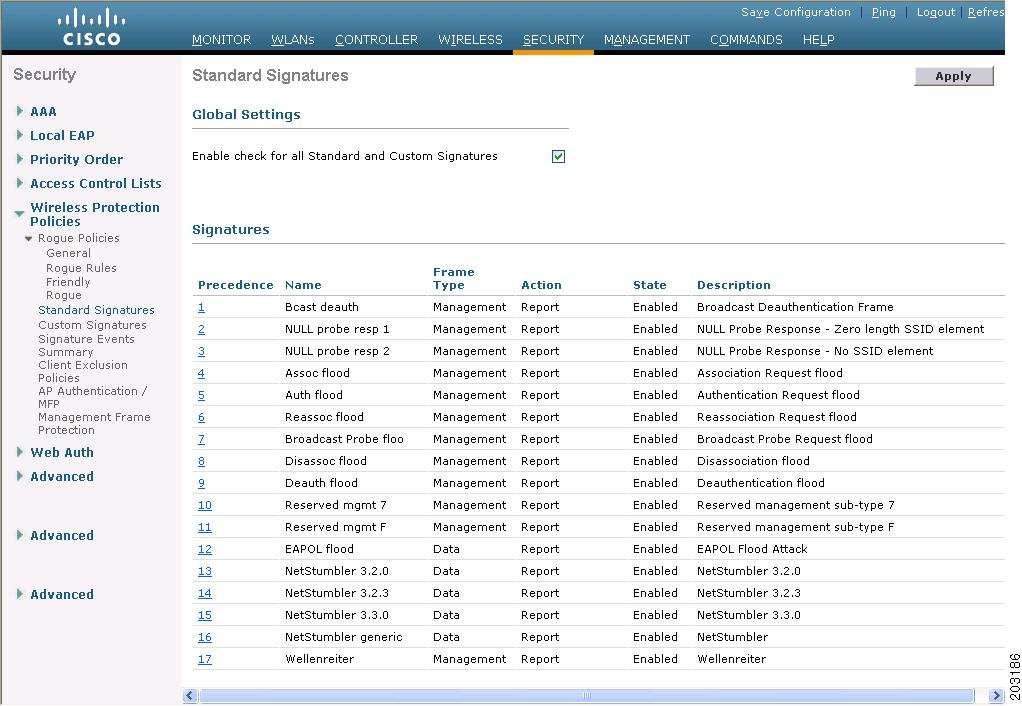

| Figure 1: Normal Multicast Traffic |

Note the data rate and destination address fields in Figure 1.

The multicast traffic is sent at the lowest mandatory rate because that is what the 802.11 standard requires. But what if there were a way to increase the data rate and deliver multicast traffic faster?

Multicast Rate Optimization

The first method that Aruba provides to boost multicast is Multicast Rate Optimization, which allows the mcast traffic to be sent at a higher data rate than the lowest mandatory rate for the SSID.

Here's how it works: every second, the controller scans the list of stations connected to the BSS and finds the lowest common transmit rate. That rate is then used to transmit multicast traffic to the BSS. Traffic is still sent at the DTIM interval and is still delivered over the air as a multicast frame.
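The rate-selection step can be modeled in a few lines. This is a simplified Python sketch of the logic as described, not Aruba's implementation. The following are all assumptions: the function `select_bcmc_rate`, its inputs, and the fallback to the lowest mandatory rate when no stations are associated.

```python
from typing import Sequence


def select_bcmc_rate(station_rates_mbps: Sequence[float],
                     mandatory_rates_mbps: Sequence[float]) -> float:
    """Pick the rate for broadcast/multicast frames in one BSS (simplified model).

    station_rates_mbps: current transmit rate to each associated station.
    mandatory_rates_mbps: the SSID's mandatory (basic) rates.
    """
    if not station_rates_mbps:
        # Assumption: with no stations to scan, keep the standard behavior.
        return min(mandatory_rates_mbps)
    # Lowest common transmit rate: the slowest station sets the pace so that
    # every associated client can still decode the multicast frame.
    return min(station_rates_mbps)


# The controller would re-run this roughly once per second as stations
# come, go, and change rates.
print(select_bcmc_rate([54, 36, 24], [6, 12, 24]))  # 24
```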

Multicast Rate Optimization is configured on the SSID profile linked to the virtual-ap profile. In the GUI, you'll have to scroll down a bit to find the setting.

Figure 2: Enabling BC/MC Rate Optimization in the SSID Profile

Note that the data rate is now 24 Mbps instead of the 6 Mbps in Figure 1. That is a marked improvement in delivery speed for multicast traffic.
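To put the jump from 6 to 24 Mbps in perspective, here is a rough Python sketch of payload airtime. The 200-byte payload is an arbitrary example. The calculation deliberately ignores PHY preamble, MAC headers, and contention, so it only shows how airtime scales with the data rate.

```python
def payload_airtime_us(payload_bytes: int, rate_mbps: float) -> float:
    """Time to clock payload bits onto the air, in microseconds.

    Deliberately ignores PHY preamble, MAC header, and contention, so it
    only shows how airtime scales with the data rate.
    """
    # bits / (megabits per second) == microseconds
    return payload_bytes * 8 / rate_mbps


frame = 200  # hypothetical payload size in bytes
slow = payload_airtime_us(frame, 6)    # lowest mandatory rate (Figure 1)
fast = payload_airtime_us(frame, 24)   # with BC/MC rate optimization
print(f"{slow:.1f} us at 6 Mbps vs {fast:.1f} us at 24 Mbps ({slow / fast:.0f}x faster)")
```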

Dynamic Multicast Optimization

Regardless of the data rate chosen for multicast traffic, there are two limitations that can't be overcome. First, the traffic is sent only at the DTIM (roughly every 100 ms by default). Second, it can't be retransmitted in case of a failure.

The first limitation can cause problems with voice applications. A standard voice codec like G.711 sends 50 packets per second, one every 20 ms. Because normal multicast can only be delivered at the DTIM, those packets are held and then sent in bursts, which creates gaps in the audio stream and results in jitter. Unless a receiver has a buffer to smooth out this delay, audio quality can suffer.

The second limitation is a result of the 802.11 standard: receivers do not acknowledge broadcast and multicast frames. Without acknowledgements, there's no way to tell whether a client received the frame, and no mechanism for retransmitting it if a client didn't.
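The voice problem is easy to quantify. This Python sketch assumes the common defaults of a 100 TU beacon interval (1 TU = 1024 microseconds, so about 102.4 ms) and a DTIM period of 1. The helper names are hypothetical.

```python
TU_MS = 1.024  # one 802.11 time unit (TU) = 1024 microseconds


def dtim_interval_ms(beacon_interval_tu: int = 100, dtim_period: int = 1) -> float:
    """Time between DTIM beacons. Defaults: 100 TU beacons, DTIM period 1."""
    return beacon_interval_tu * dtim_period * TU_MS


def voice_vs_dtim(packets_per_second: int = 50, **dtim_kwargs) -> tuple:
    """Compare a voice stream's packet spacing with DTIM-only delivery."""
    spacing_ms = 1000 / packets_per_second      # G.711 at 50 pps -> 20 ms
    interval_ms = dtim_interval_ms(**dtim_kwargs)
    # Average number of packets that pile up between two DTIM beacons and
    # then go out back-to-back as a burst.
    per_dtim = interval_ms / spacing_ms
    return spacing_ms, interval_ms, per_dtim


spacing, interval, burst = voice_vs_dtim()
print(f"one packet every {spacing:.0f} ms, delivered in bursts of ~{burst:.1f} every {interval:.1f} ms")
```

A packet that just misses a DTIM waits roughly one full DTIM interval. Raising the DTIM period (for example, `voice_vs_dtim(dtim_period=3)`) therefore makes the bursts, and the jitter, worse.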

If there were a way to transform multicast traffic into unicast traffic, it would solve both of these issues. Unicast traffic can be sent at any time between DTIMs, and it is acknowledged and retransmitted if necessary.

This mechanism is implemented as part of the 802.11v amendment and is known as Directed Multicast Service, or DMS for short. With DMS, clients connected to a BSS must request that multicast traffic be converted to unicast for them. As with many amendments, client-side support for DMS is spotty. Other wireless vendors also have mechanisms for unicast conversion: with Cisco, it is called multicast-direct or media stream, depending on which document you read.

With Aruba, this mechanism is called Dynamic Multicast Optimization, or DMO for short. Multicast traffic is converted to unicast and sent directly to the clients that want the traffic. For DMO to work, IGMP proxy must be enabled on the VLAN mapped to the virtual AP (I say IGMP proxy because I work with ArubaOS 8 clusters. IGMP snooping might work for standalone controllers, but I haven't verified that.)

Figure 4: IGMP Proxy

In order to enable IGMP proxy, the VLAN interface must be configured with a valid IP address first. When enabling IGMP proxy, you must define the proxy interface, which is the port or port-channel the controller uses to send IGMP messages to the upstream switch (more on this later).

With IGMP proxy enabled, DMO can be enabled on the virtual-ap profile:

Figure 5: Enabling DMO

When enabling DMO, you must also specify the DMO threshold. This value sets the number of receiving clients above which DMO is turned off in favor of normal multicast delivery. The threshold applies per active multicast group. For example, with a threshold of 30, if more than 30 clients joined a single group, DMO would not be used to deliver that group's traffic. Instead, delivery would fall back to either normal multicast or multicast with the rate optimization discussed above. Let's look at a Wireshark capture with DMO enabled on the virtual AP.

Figure 6: DMO-Enabled Multicast

A skeptical reader may look at Figure 6 and say, "That's just unicast traffic." They would be correct: at the MAC layer it is just unicast traffic. Because this is a WPA2 network, I can't show you the higher layers, so you'll just have to trust me. If you haven't figured it out by now, all of these captures were taken using Vocera badges doing multi-user paging, which uses multicast in the 230.230.0.0/20 range. Note that the data rate has increased again, to an HT rate of 65 Mbps, and that both the destination and receiver addresses are unicast.

DMO works best with HT-capable clients. A single non-HT station counts as 2 clients towards your DMO threshold, so if your receivers are all non-HT, you are more likely to hit the DMO threshold and have multicast traffic fall back to normal transmission methods.
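The threshold logic from the last two paragraphs can be sketched as follows. This is a Python model of the behavior described here (a per-group threshold, with non-HT stations weighted as 2), not ArubaOS code. The names `Receiver`, `dmo_load`, and `delivery_mode` are hypothetical. Treating a load exactly equal to the threshold as still eligible for DMO is an assumption based on the "above which" wording.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Receiver:
    mac: str
    ht_capable: bool


def dmo_load(receivers: List[Receiver]) -> int:
    # As described above: HT stations count as 1, non-HT stations as 2.
    return sum(1 if r.ht_capable else 2 for r in receivers)


def delivery_mode(receivers: List[Receiver], dmo_threshold: int,
                  bcmc_rate_optimization: bool = False) -> str:
    """Decide how ONE multicast group is delivered (the threshold is per group)."""
    if dmo_load(receivers) <= dmo_threshold:
        return "unicast (DMO)"
    # Above the threshold, fall back to over-the-air multicast at the DTIM.
    if bcmc_rate_optimization:
        return "multicast at lowest common rate"
    return "multicast at lowest mandatory rate"


ht = [Receiver(f"ht-{i}", True) for i in range(30)]
legacy = [Receiver(f"legacy-{i}", False) for i in range(16)]
print(delivery_mode(ht, dmo_threshold=30))      # unicast (DMO)
print(delivery_mode(legacy, dmo_threshold=30))  # multicast at lowest mandatory rate
```

In this model, 16 non-HT receivers already exceed a threshold of 30, while 30 HT receivers do not.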

In addition to DMO, there is Distributed DMO, or DDMO. DDMO applies when the forward mode of a WPA2/WPA3 virtual AP is set to decrypt-tunnel instead of tunnel. With decrypt-tunnel, the controller sends unicast frames to the AP unencrypted, and the AP does the encryption. Because of this, the unicast conversion has to happen at the AP.

This leads to one key difference between DMO and DDMO. With DDMO, if an AP has more than one receiver of a multicast stream, the controller only needs to send the frame to the AP once. The AP then individually encrypts the frame for each receiver and sends it as unicast. With regular DMO and tunnel forwarding mode, the controller must encrypt and send a copy of the frame for every receiving client on that AP. In this way, DDMO is more efficient than DMO.
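The efficiency difference comes down to how many copies of each frame cross the controller-to-AP tunnels. This hypothetical Python sketch counts the copies of one multicast frame for a few made-up per-AP receiver counts. The function name and the mode strings are illustrative.

```python
from typing import Dict


def controller_copies(receivers_per_ap: Dict[str, int], forward_mode: str) -> int:
    """Copies of ONE multicast frame the controller sends through AP tunnels."""
    if forward_mode == "tunnel":
        # DMO: the controller converts and encrypts a unicast copy per receiver.
        return sum(receivers_per_ap.values())
    if forward_mode == "decrypt-tunnel":
        # DDMO: one copy per AP that has receivers; the AP does the per-client
        # unicast conversion and encryption.
        return sum(1 for n in receivers_per_ap.values() if n > 0)
    raise ValueError(f"unsupported forward mode: {forward_mode}")


aps = {"ap-1": 5, "ap-2": 3, "ap-3": 0}   # hypothetical receiver counts
print(controller_copies(aps, "tunnel"))          # 8
print(controller_copies(aps, "decrypt-tunnel"))  # 2
```

Over the air, both modes still send one unicast frame per receiver (8 in this example). DDMO reduces tunnel traffic and controller encryption work, not airtime.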

An Important Note about DMO: for DMO to work, the user role assigned to your clients must allow multicast traffic, including IGMP joins. The built-in "voice" role does not allow IGMP. Only after I found this reference was I able to get DMO working, by adding a new session ACL to the voice role.

Multicast and Clusters

There are some special considerations for multicast traffic when using ArubaOS 8 (or later) clusters. Cluster profiles (called lc-cluster profiles in the CLI) contain an option for a "multicast VLAN." I found that the documentation around this option left something to be desired, so I dove into it. To best demonstrate what this option does, you have to look at how IGMP proxy works with the upstream wired network.

When a wireless client wants to join a multicast stream, it sends an IGMP join. With IGMP proxy enabled, the controller acting as the client's UAC intercepts the join request and proxies it to the upstream wired network.

Figure 7: Viewing Multicast Receivers

On the client's UAC, you can use the command show ip igmp proxy-group to view the multicast groups the controller is aware of. Note the VLAN shown: it is the VLAN the client is in, based on the VLAN set in the virtual AP. When you look at the controller's upstream switch, you will see the proxied IGMP join coming from the controller's VLAN 101 IP address:

Figure 8: Upstream Switch IGMP Groups

The "Last Reporter" IP address is the controller's VLAN 101 interface IP, partially redacted for...reasons. In all my examples so far, there has been only one multicast receiver. If there were more, they would be shown in the output of show ip igmp proxy-group on a controller, but there would still only be one group entry on the upstream switch.
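The many-to-one relationship between proxy-group entries on the controller and group entries on the upstream switch can be modeled as a simple aggregation. This Python sketch is a simplified model, not the controller's implementation. The IP addresses, the function names, and the group 230.230.0.1 (picked from the Vocera range) are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Join = Tuple[str, str, int]  # (client IP, multicast group, client VLAN)


def proxy_group_table(joins: Iterable[Join]) -> Dict[Tuple[str, int], Set[str]]:
    """Controller-side view, loosely like `show ip igmp proxy-group`."""
    table: Dict[Tuple[str, int], Set[str]] = defaultdict(set)
    for client_ip, group, vlan in joins:
        table[(group, vlan)].add(client_ip)
    return dict(table)


def upstream_view(table: Dict[Tuple[str, int], Set[str]],
                  controller_vlan_ips: Dict[int, str]) -> Dict[Tuple[str, int], str]:
    """What the upstream switch sees: one report per (group, VLAN), with the
    controller's VLAN interface IP as the last reporter."""
    return {(group, vlan): controller_vlan_ips[vlan] for group, vlan in table}


joins = [("10.1.101.20", "230.230.0.1", 101), ("10.1.101.21", "230.230.0.1", 101)]
table = proxy_group_table(joins)
print(table)                                      # two receivers under one key
print(upstream_view(table, {101: "10.1.101.2"}))  # a single upstream entry
```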

Now let's look at a cluster that has the Mcast VLAN setting defined.

Figure 9: Cluster Profile with MCAST-VLAN

When a client joins a multicast stream on a cluster with an MCAST-VLAN value set in the cluster profile, the output of show ip igmp proxy-group looks different.

Figure 10: Proxy Group with MCAST-VLAN

Note the difference in the VLAN. Rather than the VLAN the client is in, the proxy-group now shows the value configured for the MCAST-VLAN of the cluster. Looking at the upstream switch reveals something similar:

Figure 11: Upstream Switch with Cluster MCAST-VLAN

Note that the IGMP group now shows VLAN 777, the VLAN configured as the MCAST-VLAN in the cluster profile. For this to work, IGMP proxy must also be configured on the VLAN interface for the chosen VLAN. In this example, IGMP proxy was enabled on the controllers' VLAN 777 interfaces.

Why go through this extra work in enabling the MCAST-VLAN on the cluster? First, with the MCAST-VLAN set, you only need to configure multicast routing (e.g., PIM) on the MCAST-VLAN router interface. You won't need to configure multicast routing on all the VLAN interfaces that the clients will reside on. The cluster will take care of sending the multicast streams to the clients. In my examples, you would only need PIM enabled on VLAN 777, and not on VLAN 101, or any other VLANs where clients might be.

Second, if your virtual AP uses a named VLAN containing a list of VLAN IDs and you don't use the cluster MCAST-VLAN, multiple copies of a stream could be delivered to clients. Consider a virtual AP mapped to a named VLAN that contains VLAN IDs 10 and 20. Clients on that virtual AP want to receive multicast traffic, so IGMP joins are sent out on both VLAN 10 and VLAN 20. The upstream router sees those joins and delivers a stream to the controller on both VLANs. If you are not using DMO, or the number of receivers exceeds the DMO threshold, both the VLAN 10 and VLAN 20 streams will be delivered using "normal" multicast on the virtual AP. Not only is this very inefficient, it could also cause clients to fail to receive the stream. The bottom line: if you have virtual APs using named VLANs with multiple VLAN IDs, and clients on those virtual APs need to receive multicast, I recommend using the MCAST-VLAN value in the cluster profile.
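The duplicate-stream problem comes down to the upstream router delivering one stream for each (group, VLAN) pair it has joins for. This hypothetical Python model assumes each join is proxied on the client's VLAN unless a cluster MCAST-VLAN is set. The addresses and the function name are illustrative.

```python
from typing import Iterable, Optional, Set, Tuple

Join = Tuple[str, str, int]  # (client IP, multicast group, client VLAN)


def upstream_streams(joins: Iterable[Join],
                     mcast_vlan: Optional[int] = None) -> Set[Tuple[str, int]]:
    """(group, VLAN) streams the upstream router delivers to the cluster.

    Without a cluster MCAST-VLAN, joins are proxied on each client's own VLAN.
    With one, every join is proxied on the MCAST-VLAN instead.
    """
    return {(group, mcast_vlan if mcast_vlan is not None else vlan)
            for _client, group, vlan in joins}


# Clients on one virtual AP whose named VLAN contains VLAN IDs 10 and 20.
joins = [("10.0.10.5", "230.230.0.1", 10), ("10.0.20.7", "230.230.0.1", 20)]
print(len(upstream_streams(joins)))                  # 2: duplicate copies on one SSID
print(len(upstream_streams(joins, mcast_vlan=777)))  # 1
```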

An Important Note About Using the MCAST-VLAN Setting

If you specify a VLAN ID for the mcast-vlan value in the cluster profile that is different from the source VLAN of the multicast stream, your upstream network must be able to route the multicast traffic between those VLANs.

In the examples above, the mcast-vlan was set to 777. Suppose the source of the multicast traffic were in VLAN 132. The upstream network must then be capable of routing the multicast traffic in order for all the clients on the cluster to receive the stream. I emphasize the cluster here because of the case where the multicast source is itself a wireless client on the cluster. Receiving clients whose UAC differs from the source's UAC won't get the stream unless the upstream wired network can route multicast between VLAN 132 and VLAN 777.

Conclusion

This has been a long and technical blog post on multicast with Aruba controller-based wireless. I hope that the reader has gained some understanding of how to optimize multicast with Aruba, and if you have any questions or corrections, please leave a comment.